Shape-Based Analysis of Object Behaviour

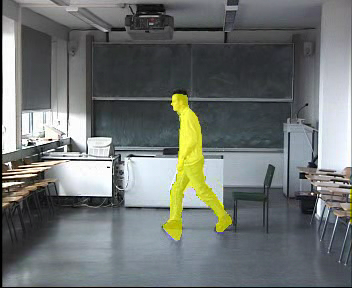

Once an automatic segmentation algorithm has determined the shapes of the video objects, further processing can extract high-level semantic information. For example, the resulting object masks can be used to identify each object and assign it to a class such as human, car, or bird. Furthermore, objects are usually not static: they perform some action in the video sequence, which can also be analysed and assigned to a sub-class such as walking human, standing human, or sitting human. Analysing the sequence of object sub-classes over time can be regarded as extracting the object's behaviour.

A model for object behaviour

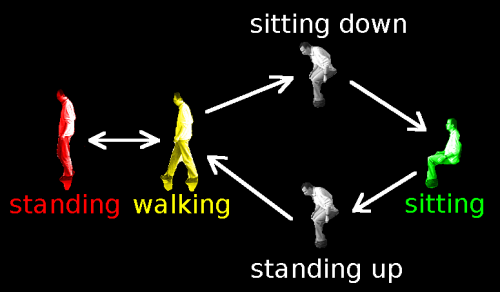

Real-world objects cannot suddenly change their class in an arbitrary way. For example, a human can never become a car for just a couple of frames, even if its shape suggests this. However, if we further subdivide each class into behaviour sub-classes, transitions between sub-classes may occur. Even though an object can in principle appear in any sub-class, there are restrictions on which state changes are allowed. These transitions can be described by a state-transition graph, as shown in the accompanying figure. According to this model, a human can walk, stand, and sit, but must pass through the sitting-down state before sitting. The possible transitions can also be weighted, so that sitting down has a higher cost (because it is less probable) than simply continuing to walk.
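A weighted state-transition graph of this kind can be sketched as a small table of transition probabilities, with the cost of a transition taken as its negative log-probability. The sketch below is only an illustration: the probability values, the `standing_up` state, and the names `TRANSITIONS`, `transition_cost`, and `path_cost` are assumptions made for this example and do not come from the original model.

```python
import math

# Hypothetical transition probabilities (illustrative values only).
# A missing entry means the transition is not allowed in the graph.
# The "standing_up" state is an assumption added so that sitting
# is not a dead end.
TRANSITIONS = {
    "walking":      {"walking": 0.90, "standing": 0.10},
    "standing":     {"standing": 0.80, "walking": 0.15, "sitting_down": 0.05},
    "sitting_down": {"sitting_down": 0.50, "sitting": 0.50},
    "sitting":      {"sitting": 0.95, "standing_up": 0.05},
    "standing_up":  {"standing_up": 0.50, "standing": 0.50},
}


def transition_cost(src, dst):
    """Negative log-probability of src -> dst; infinite if forbidden."""
    p = TRANSITIONS[src].get(dst, 0.0)
    return -math.log(p) if p > 0.0 else math.inf


def path_cost(states):
    """Sum of the transition costs along a sequence of states."""
    return sum(transition_cost(a, b) for a, b in zip(states, states[1:]))


# Jumping straight from walking to sitting is forbidden,
# while going through the intermediate states has a finite cost.
print(transition_cost("walking", "sitting"))
print(path_cost(["walking", "standing", "sitting_down", "sitting"]))
```

With costs defined this way, an improbable transition such as starting to sit down is more expensive than continuing to walk, and a forbidden transition can never be part of a finite-cost sequence.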

Assigning object classes

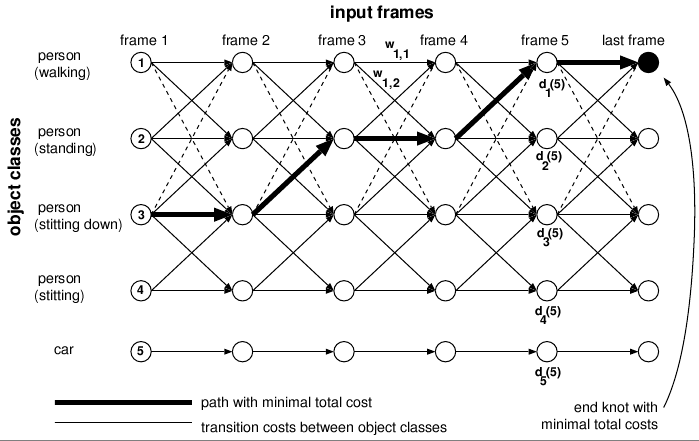

Using the state-transition probabilities and an observation model based on comparing the shape of the segmented object with a database of prototype shapes, we build a model that describes the per-frame classification of the object. The most probable sequence of per-frame classes can be computed efficiently with a dynamic programming approach, as shown in the accompanying figure.
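One standard dynamic-programming scheme for this kind of problem is the Viterbi recursion, sketched below on a toy example. It is not necessarily the exact procedure used in this work. The function name `viterbi`, the two-state setup, and all cost values are assumptions for illustration; in particular, the observation costs are made-up numbers standing in for the result of comparing a segmented shape with prototype shapes.

```python
import math


def viterbi(states, trans_cost, obs_costs):
    """Return (total_cost, path) of the minimum-cost state sequence.

    states     -- list of state names
    trans_cost -- trans_cost[a][b] is the cost of moving from a to b;
                  missing entries are treated as forbidden (infinite cost)
    obs_costs  -- one dict per frame, mapping state -> observation cost
    """
    # Cost of the best path ending in each state at the first frame.
    best = {s: obs_costs[0][s] for s in states}
    back = []  # back[t-1][s] is the best predecessor of s at frame t

    for frame in obs_costs[1:]:
        new_best, pointers = {}, {}
        for s in states:
            # Cheapest way to arrive in state s from any previous state.
            prev = min(
                states,
                key=lambda p: best[p] + trans_cost[p].get(s, math.inf),
            )
            new_best[s] = best[prev] + trans_cost[prev].get(s, math.inf) + frame[s]
            pointers[s] = prev
        best = new_best
        back.append(pointers)

    # Trace the best path backwards from the cheapest final state.
    last = min(states, key=lambda s: best[s])
    path = [last]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    path.reverse()
    return best[last], path


# Toy example (all numbers hypothetical): the third frame looks slightly
# more like "standing", but switching state is expensive.
STATES = ["walking", "standing"]
TRANS_COST = {
    "walking":  {"walking": 0.1, "standing": 2.0},
    "standing": {"standing": 0.1, "walking": 2.0},
}
OBS_COSTS = [
    {"walking": 0.2, "standing": 1.5},
    {"walking": 0.3, "standing": 1.4},
    {"walking": 1.0, "standing": 0.8},
    {"walking": 0.2, "standing": 1.6},
]

cost, path = viterbi(STATES, TRANS_COST, OBS_COSTS)
print(cost, path)
```

In this toy setup, classifying each frame independently would label the third frame as standing. The joint optimisation keeps the object in the walking state throughout, because the two state switches would cost more than the slightly worse observation match in that frame.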