| Automatic Video Segmentation

Employing Object/Camera Modeling Techniques |

|||

| Dirk Farin | |||

|

|

|

| Welcome to the homepage of

my PhD thesis ! On this page, you can access the full text and also get more information for many chapters, like example results and demo movies. Have fun browsing through all of it and don't hessitate to ask for further information in case you got interested. Dirk Farin |

Abstract

Practically established video compression and storage techniques still process video sequences as rectangular images without further semantic structure. However, humans watching a video sequence immediately recognize acting objects as semantic units. This semantic object separation is currently not reflected in the technical system, making it difficult to manipulate the video at the object level. The realization of object-based manipulation will introduce many new possibilities for working with videos, like composing new scenes from pre-existing video objects or enabling user-interaction with the scene.

A prerequisite for employing object-based video processing is automatic (or at least user-assisted semi-automatic) segmentation of the input video into semantic units, the video objects. This segmentation is a difficult problem because the computer does not have the vast amount of pre-knowledge that humans subconsciously use for object detection. Thus, even the simple definition of the desired output of a segmentation system is difficult. The subject of this thesis is to provide algorithms for segmentation that are applicable to common video material and that are computationally efficient.

- First, a segmentation system based on a background-subtraction technique is described. The pure background image that is required for this technique is synthesized from the input video itself. Sequences that contain rotational camera motion can also be processed since the camera motion is estimated and the input images are aligned into a panoramic scene-background.

- Afterwards, the described segmentation system is extended to consider object models in the analysis. Object models allow the user to specify which objects should be extracted from the video. The object detection uses a graph-based object model in which the features of the main object regions are summarized in the graph nodes and the spatial relations between these regions are expressed with the graph edges.

- Finally, different techniques to derive further information about the physical 3-D world from the camera motion are presented. For example, an extension to the camera-motion estimation is introduced in order to factorize the motion parameters into physically meaningful parameters (rotation angles, focal-length) using camera autocalibration techniques.

| Table of Contents (pdf) | |

| Chapter

1: Introduction (pdf) This chapter presents a

survey of typical application areas that apply segmentation algorithms.

This includes video editing, compression, content analysis, and 3-D

reconstruction applications. A particular focus is on the concept of

object-oriented video coding in the MPEG-4 standard. Afterwards, the

requirements are defined for a segmentation system that is compliant

with the MPEG-4 video-coding approach. A proposal for such a

segmentation system is made and the main components are briefly

introduced.

|

|

| Part I: An Automatic Video Segmentation System | |

| Chapter

2: Projective Geometry (pdf) This chapter gives an

introduction to projective geometry as far as it is required to

understand the subsequent chapters. We start with defining the

projective space and deriving basic operations on points and lines in

it. We proceed by discussing geometric transformations in the

projective plane and in the 3-D Euclidean space, including the

projection of 3-D space onto a 2-D image plane. Finally, we construct a

detailed model of the image formation process for a moving camera.

|

|

| Chapter

3: Feature-based Motion I: Point-Correspondences (pdf) A feature-based motion estimator

comprises three steps: detection of feature-points, establishing

feature-correspondences, and estimation of camera motion parameters. In

this chapter, we give an introduction to feature-based motion

estimation and we cover the first two steps. We provide a survey of

feature-point detectors and evaluate their accuracy. After that, we

present a fast algorithm for determining correspondences between two

sets of feature-points.

|

|

| Chapter

4: Feature-based Motion II: Parameter Estimation (pdf) This chapter describes the

algorithm for computing the parameters of the projective motion model,

including the case of images with multiple independent motions. To

extract the dominant motion model in this case, we apply the RANSAC

algorithm. An evaluation of the robust estimation algorithm shows

that the accuracy of the results in practice is worse than

expected from a theoretic evaluation. However, after analyzing this

discrepancy, we propose a modification to reach the theoretical

performance.

|

|

| Chapter

5: Background Reconstruction (pdf) In order to synthesize a

background image of a scene recorded with a rotational camera, we first

increase the accuracy of the camera-motion parameters such that

long-term consistency is achieved. After that, the input frames are

combined such that moving foreground objects are removed. We

review some of the existing approaches and present a new algorithm for

background estimation which is designed for difficult sequences where

the background is only visible for a short time period.

|

|

| Chapter

6: Multi-Sprite Backgrounds (pdf) The MPEG-4 standard enables to

send a background image (sprite) independently from the foreground

objects. Whereas it seems optimal to combine as many images into one

background sprite as possible, we have found that the counter-intuitive

approach of splitting the background into several independent parts can

reduce the overall amount of data needed to transmit the background

sprite.

We propose an algorithm that provides an optimal partitioning of a video sequence into independent background sprites, resulting in a significant reduction of the involved coding cost. (Content of this chapter has received two best paper awards.) |

|

| Chapter

7: Background Subtraction (pdf) We discuss the

background-subtraction module, starting with simple independent pixel

classification and then proceeding to more complex tests that include

contextual information to decrease the number classification errors.

Furthermore, typical problems that lead to segmentation errors are

identified and a modification to the segmentation algorithm is provided

to reduce these effects.

Finally, a few postprocessing filters are presented that can remove obvious errors like small clutter regions. |

|

| Chapter

8: Results and Applications (pdf) This chapter describes several

variants how to combine the algorithms that were presented in Chapters

2-7 into a complete segmentation system. Results are provided

for a large variety of sequences to show the quality of th

segmentation masks, but also to show the limitations of the

proposed approach. Algorithm enhancements to overcome these limitations

are proposed for future work. Finally, we provide examples for applying

segmentation system in MPEG-4 video coding, 3-D video generation,

video editing, and video analysis.

|

|

| Part II: Segmentation Using Object Models | |

| Chapter

9: Object Detection based on Graph-Models I: Cartoons (pdf) Sometimes it is desired to

specify which objects should be extracted from a picture. This and

the following chapter present a graph-based model to describe video

objects. The model-detection algorithm is based on an inexact

graph-matching between the user-defined object model and an

automatically extracted graph covering the input image. We discuss the

model-generation process, and we present a matching algorithm

tailored to the object detection in cartoon sequences.

|

|

| Chapter

10: Object Detection based on Graph-Models II: Natural (pdf) This chapter presents an

algorithm for video-object segmentation that combines motion

information, a high-level object-model detection, and spatial

segmentation into a single framework. This joint approach overcomes the

disadvantages of these algorithms when they are applied independently.

These disadvantages include the low semantic accuracy of spatial color

segmentation, the inexact object boundaries obtained from

object-model matching and the often incomplete motion information.

|

|

| Chapter

11: Manual Segmentation and Signature Tracking (pdf) For some applications it can be

advantageous to use semi-automatic segmentation techniques, in which

the user controls the segmentation manually. A popular approach is

the Intelligent Scissors tool, which uses shortest-path algorithms

to locate the object contour between two user-supplied control

points. We propose a new segmentation tool, where the user specifies a

rough corridor along the object boundary, instead of placing

control points. This tool is based on a new algorithm for computing

circular shortest paths. Finally, the algorithm is extended with a

tracking component for video data.

|

|

| Part III: From Camera Motion to 3-D Models | |

| Chapter

12: Estimation of Physical Camera Parameters (pdf) We discuss the problem of

calculating the physical motion parameters from the frame-to-frame

homographies. These camera-motion parameters represent a valuable

feature for sequence classification, or augmented-reality applications

that

insert computer-generated 3-D objects into a natural scene. We present two algorithm variants: the first algorithm applies a fast linear optimization approach, whereas the second algorithm uses a non-linear bundle-adjustment algorithm that provides a high accuracy. Both algorithms have been combined with our multi-sprite technique to support unrestricted camera motion. |

|

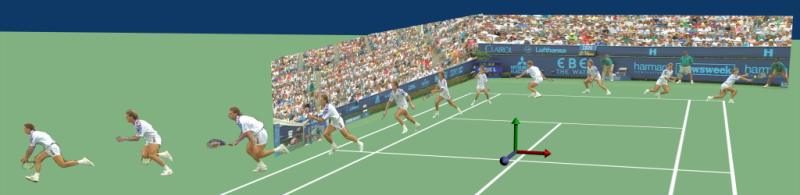

| Chapter 13: Camera Calibration for the

Analysis of Sport Videos (pdf) For the in-depth analysis of

sports like tennis or soccer, it is required to know the positions

of the players on the tennis court or soccer field. To obtain these

positions, it is necessary to establish the transformation between

image coordinates and real-world coordinates. We employ a model of the

playing field to define the real-world reference coordinate system

and we describe a new algorithm that localize the user-defined

geometric model in an input image in order to find the geometric

transformation between both.

|

|

| Chapter

14: Panoramic Video and Floor Plan Reconstruction (pdf) Previously,

we have used a planar projection of the scene background. However, the

most frequently-used model for panoramic images is the cylindrical

panorama. Since cylindrical images are a special kind of image with

geometric distortions, their contents are not always easy to

interpret. Therefore, we propose new visualization technique that

reconstructs the geometry of the room in which the panoramic image

was recorded. Finally, this concept is generalized to reconstruct a

complete floor plan based on multiple panoramic images.

Additionally to the aforementioned improved visualization, this enables

new applications like the presentation of real estate with virtual

tours through the appartment.

|

|

| Chapter

15: Conclusions (pdf) This thesis has presented various

techniques for video-object segmentation. An automatic segmentation

system for rotating cameras was presented and extended with

object-model controlled segmentation and camera autocalibration. In

this chapter, the achievements are summarized and it is discussed

how these techniques could be enhanced in future research. Finally,

interesting research directions are highlighted that may be

promising approaches for future video-segmentation systems.

|

|

| Appendices | |

| Appendix

A: Video-Summarization with Scene Preknowledge (pdf) For long video sequences, it is

not easy to quickly get an overview of the content.

If the video content could be summarized with a collection of representative key-frames, the user could get an immediate overview. This appendix presents a new two-step clustering-based algorithm. The first stage provides a soft form of shot separation, determining periods of stable content. The second clustering stage then selects the final set of key-frames. Image content which is not desired in the summary can be excluded by providing sample images of shots to exclude. |

|

| Appendix

B: Efficient Computation of Homographies From Four Correspondences

(pdf) The usual way to compute the

parameters of a projective transform from four

point coordinates is to set up an 8x8 equation system. This appendix describes an algorithm to obtain these parameters with only the inversion of a 3x3 equation system. |

|

| Appendix

C: Robust Motion Estimation with LTS and LMedS (pdf) In Chapter 4, we employed the

RANSAC algorithm to calculate global-motion parameters for a set of

point-correspondences between two images. One disadvantage of the

RANSAC algorithm is its dependence on the inlier threshold.

In this chapter, we look at two alternative algorithms: Least-Median-of-Squares (LMedS), and Least-Trimmed-Squares (LTS). |

|

| Appendix D: Additional Test Sequences (pdf) | |

| Appendix

E: Color Segmentation Using Region Merging (pdf) In this appendix, we briefly

introduce the region-merging algorithm and present several merging

criteria and evaluate their performance in terms of noise robustness

and subjective segmentation quality. Furthermore, we introduce a

new merging criterion yielding a better subjective segmentation

quality, and propose to change to merging criterion during

processing to further increase the overall robustness and segmentation

quality. (Content

of this chapter has received a best paper award.)

|

|

| Appendix F: Shape-Based Analysis of Object

Behaviour (pdf) One example application of

segmentation is to use the obtained object masks to identify the

object. Since objects usually do not appear static, but perform some

motion, this can also be analysed and assigned to sub-classes like

"walking human", or "sitting human". We combined a

classification into several pre-defined classes with a model

of the transition probability between these classes over time. Having a

model that describes the transitions between classes makes the

classification more robust than an independent classification for

each input frame.

|

|

| References, Summary, and Biography (pdf) | |

| Thesis statements (Stellingen) (pdf) | |

The full text of the PhD thesis is also available as PDF (60 MB, 561 pages, excluding thesis statements).

There are a few printed copies left (two books with partly colored figures). If you would like to receive one of them, simply contact me by .